Credit goes to Tendem - the hybrid AI+Human agent that I develop myself :)

Text classification is one of the most common tasks in natural language processing. It is used to categorize reviews by sentiment, classify user queries by intent, sort documents by topic, and much more. There are numerous approaches to solving this problem, from traditional keyword-based methods to deep learning models that leverage semantic understanding (transformers and the whole family of LLMs).

Another approach that became popular with the widespread use of LLMs is the few-shot technique. The idea is quite simple: let’s take a description of the task, a couple of examples, show it all to the model, and ask it to classify the new input. This approach has many advantages compared to traditional methods:

- It is very easy to implement. You don’t need to train anything - just grab an LLM’s API, write a prompt, and you’re done. It lowers the entry barrier significantly, so non-ML engineers (or even non-engineers altogether) can use it to solve their problems.

- It is very flexible. To solve a different problem you can use the same model and only change the prompt and the examples.

- It is very powerful. LLMs are trained on a huge amount of data, so they already know a lot about the world - what certain words and phrases mean, how they relate to each other, and so on.

- It is very data-efficient. You don’t need to collect and label a large dataset to train a model (as we used to do before) - just a few examples and a good explanation of the task are enough to get good results.

Few-shot classification became possible thanks to so-called “in-context learning” - the ability of LLMs to learn not only from the training data they were initially trained on, but also from the input they receive at inference time. You can provide the model with a description of the task, a couple of examples of how to solve it, and then simply ask it to do the same for a new input.

In this article I will study (and somewhat compare) different few-shot techniques for text classification using LLMs (as there are different ways to “select” the “best” examples to show to the model; and yes, it does affect the performance). I will also outline some advantages and disadvantages of each approach, and write a small guide on when to use which one.

Few-shot classification is not a silver bullet. It is a good starting point and a solid baseline to improve on. But if you want the best results, the best inference-time performance, or more control over the model’s behavior, you will most likely need to train a custom model, with all the hassle that comes with it.

The problem - scientific paper classification

For the sake of simplicity (and because I already have a dataset for this, and I don’t want to waste a lot of inference time and money on this experiment), I will use a dataset of scientific papers that I collected a while ago - WOS-11967. I already used it in the article about text classification on extra-small datasets, but here the problem will be a bit different. I will use paper keywords to classify articles into 35 scientific areas from 7 different domains (5 areas per domain). So, the task is to predict the area of a scientific paper based on its keywords.

Again, for the sake of simplicity and demonstration, I will use a balanced split of the dataset:

| Split | # of domains | # of areas per domain | # of examples per area | Total examples |
|---|---|---|---|---|
| Train | 7 | 5 | 20 | 700 |
| Val | 7 | 5 | 10 | 350 |
| Test | 7 | 5 | 50 | 1750 |
| Total | 7 | 5 | 80 | 2800 |

The Train split will be used to select the few-shot examples, the Val split - to potentially optimize the shots in one way or another, and the Test split - to evaluate the final performance of the model. Here’s a small sample of the dataset just to give you an idea of what it looks like:

| Keywords | Scientific Area | Domain |
|---|---|---|
| growth; tyrosine kinase inhibitors; resistant; Philadelphia-positive acute lymphoblastic leukemia; mechanism; bone marrow stromal cells | polymerase chain reaction | biochemistry |
| Qualitative research; rigour; trustworthiness; impact evaluation; evidence-based policy | attention | Psychology |
| e-learning; educational resources; sequencing; interoperability | computer programming | CS |
| Monte Carlo; Depletion; Thermal-hydraulics; Coupling; Sub-step; BGCore | hydraulics | MAE |
| Agile; Scrum; Web Engineering; CMMI; Software Engineering | software engineering | CS |

The model design - prompting and inference

For all methods that I will explore here, I will use the same design and model, and only tweak the way I select the few-shot examples. Model: GPT-5-Nano. It’s the fastest, cheapest version of GPT-5, and yet it’s quite capable for text classification tasks. Inference design:

- I will use the same system prompt for all methods, which briefly describes the task and the available classes (scientific areas).

- Each few-shot example will consist of two conversation turns: the first one will be the user turn with the keywords of a paper, and the second one will be the assistant turn with the correct scientific area.

- The model’s outputs are subject to structured decoding: I define a strict JSON format for the response, and the model is forced to follow it. The assistant turns in the few-shot examples are written in exactly the same format.

The format of this JSON will be the following:

```json
{
  "scientific_area": "the predicted scientific area, choice restricted to the 35 available areas"
}
```

So the overall conversation will look like this:

```
[
  {"role": "system", "content": "<system prompt>"},
  {"role": "user", "content": "Classify the following set of keywords: <keywords of the paper>"},
  {"role": "assistant", "content": "{\"scientific_area\": \"<the correct scientific area>\"}"},
  ...
  {"role": "user", "content": "Classify the following set of keywords: <keywords of the INPUT paper to classify>"}
]
```

Structured decoding prevents models from hallucinating non-existent classes and makes the output more consistent and easier to parse.

Note that there are different ways of “providing” few-shots to the model and generating outputs. For example, one can simply put all the few-shots into the system prompt or even into the first user turn. Outputs can be plain text as well (they can also be constrained, for instance with regular expressions). I prefer the design described above, as it’s easier to maintain using Pydantic schemas, and it’s more natural for the model from a behavioral perspective: the user gives an input, the assistant gives an output, and so on, so the model follows the pattern established by the “previous” turns.
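A minimal sketch of assembling this conversation, assuming the few-shot examples are `(keywords, area)` pairs. `AREAS`, `OUTPUT_SCHEMA`, `build_messages`, and `parse_prediction` are hypothetical names, the area list is truncated for brevity, and the schema is written as a plain JSON Schema dict rather than a Pydantic model. Restricting `scientific_area` with an `enum` is the kind of constraint a structured-decoding backend uses to rule out non-existent classes.

```python
import json

# Hypothetical, truncated list of area names; the real task has 35 of them.
AREAS = ["software engineering", "computer programming", "hydraulics", "attention"]

# JSON Schema for the assistant output: one field restricted to the known areas.
OUTPUT_SCHEMA = {
    "type": "object",
    "properties": {"scientific_area": {"type": "string", "enum": AREAS}},
    "required": ["scientific_area"],
    "additionalProperties": False,
}

PREFIX = "Classify the following set of keywords: "


def build_messages(system_prompt, shots, keywords):
    """Turn (keywords, area) few-shot pairs into alternating user/assistant turns."""
    messages = [{"role": "system", "content": system_prompt}]
    for shot_keywords, area in shots:
        messages.append({"role": "user", "content": PREFIX + shot_keywords})
        # The assistant turn uses exactly the JSON format the model must produce.
        messages.append({"role": "assistant",
                         "content": json.dumps({"scientific_area": area})})
    # The last user turn holds the paper we actually want to classify.
    messages.append({"role": "user", "content": PREFIX + keywords})
    return messages


def parse_prediction(raw):
    """Parse the model's JSON reply and double-check that the label is a known area."""
    area = json.loads(raw)["scientific_area"]
    if area not in AREAS:
        raise ValueError(f"unknown area: {area}")
    return area
```

The schema dict would then be handed to whatever structured-output option your LLM provider exposes; `parse_prediction` is a cheap safety net in case the output is ever produced without that constraint.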

Metrics

I’ll keep this simple as well. To evaluate a method’s performance, I will use accuracy and macro F1-score. Accuracy is suitable here as the dataset is balanced, and macro F1-score will give us a better understanding of how the model performs across different classes, especially if some classes are harder to classify than others. If the model systematically misclassifies a certain class, its F1-score will be very low, and macro F1 will reflect that.
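For reference, both metrics fit in a few lines of plain Python. This is a sketch that should agree with scikit-learn’s `accuracy_score` and `f1_score(..., average="macro")`; in practice you would simply call those.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every class seen in y_true or y_pred."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[t] += 1  # the true class t was missed
    classes = set(y_true) | set(y_pred)
    f1_scores = []
    for c in classes:
        # F1 = 2TP / (2TP + FP + FN), written without precision/recall intermediates
        denom = 2 * tp[c] + fp[c] + fn[c]
        f1_scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)
```

The effect described above is easy to see on a toy case: if the true labels are `["a", "a", "b", "b"]` and the model predicts `"a"` every time, accuracy is 0.5, but class `"b"` gets an F1 of 0 and macro F1 drops to about 0.33.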

Few-shot techniques

Zero-shot prompting

The most basic approach to few-shot classification is… not to provide any examples at all. Let’s simply describe the task to the model and ask it to classify the input based on that description. This approach is called zero-shot prompting. You can find the system prompt that I used in this experiment on GitHub. It contains a brief description of the task and a list of all available scientific areas grouped by domain. It doesn’t explain the areas in detail, as I want to see how much the model can do based only on the names of the areas and its general knowledge about them. This should also increase the importance of the few-shot examples, as they will be the only source of information about “how to classify” for the model.

This approach yields the following results on the test set:

| Metric | Value |
|---|---|
| Accuracy | 0.631 |
| Macro F1 | 0.632 |

This will be our baseline. Performing worse than that means the few-shot examples are not helpful and only confuse the model. Performing better means the few-shot examples are helpful, and we can potentially optimize their selection to get even better results.

Overall, I would say that the results are quite good for zero-shot prompting, given that we have 35 classes to choose from.

Random few-shot selection

Probably the most straightforward way to select few-shots is to simply randomly sample a few examples from the training set. This approach is called random few-shot selection. An important note here is that since we have 35 classes, we may end up selecting examples from only a few of them and not covering some others, which may not be ideal for the model’s performance. So we’ll compare two approaches here: one with completely random selection, and another one with stratified random selection, where we ensure that we select the same number of examples from each class.

Here and below I will use 70 few-shot examples in total, and 2 examples per class for the stratified approach (which results in the same total number of examples). So in total I will always provide the model with 70 examples, but the way I select them will differ.
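A minimal sketch of both sampling schemes, assuming the training split is a list of `(keywords, area)` pairs. The function names and the seed are illustrative, and the final shuffle in the stratified version is an assumption (the article does not say in which order the stratified examples are shown).

```python
import random
from collections import defaultdict


def random_shots(train, k=70, seed=42):
    """Sample k (keywords, area) pairs uniformly, ignoring class balance."""
    rng = random.Random(seed)
    return rng.sample(train, k)


def stratified_shots(train, per_class=2, seed=42):
    """Sample the same number of examples from every class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for keywords, area in train:
        by_class[area].append((keywords, area))
    shots = []
    for area in sorted(by_class):  # fixed class order keeps the draw reproducible
        shots.extend(rng.sample(by_class[area], per_class))
    # Assumption: shuffle so that classes do not appear in one block each.
    rng.shuffle(shots)
    return shots
```

With 35 classes and `per_class=2`, `stratified_shots` returns exactly 70 examples, the same budget as `random_shots(train, k=70)`, so the two variants differ only in how the examples are chosen.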

The number of few-shot examples does matter, and it is a hyperparameter that can be optimized as well. I chose 70 examples for this experiment as it’s a good balance between providing the model with enough information about the task and not spending a lot of time/money on inference (as the more examples we provide, the more tokens we use, and the more expensive the inference becomes).

The results of random few-shot selection are the following:

| Metric | Value |
|---|---|
| Accuracy (70 total) | 0.636 |
| Macro F1 (70 total) | 0.624 |
| Accuracy (stratified) | 0.645 |
| Macro F1 (stratified) | 0.637 |

We can see two things here. First, random few-shot selection slightly improves accuracy compared to zero-shot prompting (although for completely random selection macro F1 actually drops a little), which suggests that the examples do provide some useful information to the model. Second, stratified random selection performs better than completely random selection on both metrics, which suggests that covering all classes is beneficial for the model’s performance. Overall, the results align with my expectations (which is a good sign).

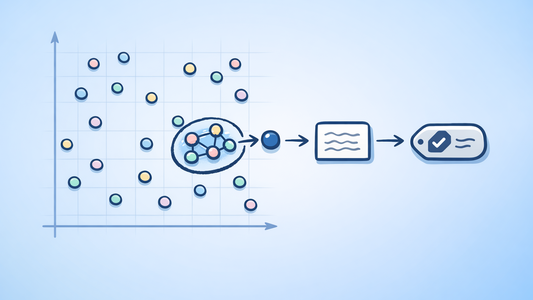

K-nearest neighbors few-shot selection

Spaces, embeddings, neighbors, and the whole party :)

This approach is based on the following idea: instead of randomly selecting examples from the training set, let’s select examples that are more similar to the one we want to classify. The intuition behind this is that if we provide the model with examples similar to the input, it will be easier for the model to understand what we want from it. These similar examples could also belong to different classes, which may help the model better understand the boundaries between classes and thus improve its performance.

A very important note here is that this approach is much more expensive than the previous ones. First, we need to compute embeddings for all examples in the training set and store them somewhere. Second, for each input we want to classify, we need to compute its embedding and then find its nearest neighbors in the embedding space, which adds overhead to inference. Lastly, the few-shot turns (two per example) will most likely differ for every input, so we can’t make use of prefix caching to speed up inference and lower its cost (the only part shared across inputs is the system prompt).

There is another concern about few-shot selection — how to rank the examples and where to place them in the conversation. Should we put the closest examples first (right after the system prompt), or should we put them last (right before the input we want to classify)? Or should we randomly shuffle them? I won’t be extensively testing all these options and will simply put the closest examples last, as in my opinion it makes more sense to show the model the most relevant examples right before asking it to classify the input, so it can better grasp what we want from it.

Regarding embeddings, I will use OpenAI’s text-embedding-3-large model and cosine similarity to find the nearest neighbors. Because this approach is much more expensive, I will also select only the 20 closest examples from the training set (instead of 70), so the comparison with the other methods is not exactly like-for-like.
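The selection step itself is small. Below is a sketch assuming the training embeddings are already computed and stored as a NumPy array; `knn_shots` is a hypothetical helper name. It returns indices ordered from least to most similar, matching the choice of placing the closest examples last.

```python
import numpy as np


def knn_shots(query_emb, train_embs, k=20):
    """Indices of the k training examples most cosine-similar to the query.

    Ordered from least to most similar, so that the closest examples end up
    right before the input in the conversation.
    """
    q = query_emb / np.linalg.norm(query_emb)
    t = train_embs / np.linalg.norm(train_embs, axis=1, keepdims=True)
    sims = t @ q                 # cosine similarity to every training example
    top = np.argsort(-sims)[:k]  # k most similar, most similar first
    return top[::-1].tolist()    # reversed: most similar last
```

In a real pipeline you would normalize `train_embs` once up front rather than per query. For 700 training examples a brute-force dot product is cheap; an approximate nearest-neighbor index only pays off for much larger training sets.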

The results of k-nearest neighbors few-shot selection are the following:

| Metric | Value |
|---|---|
| Accuracy (20 total) | 0.707 |
| Macro F1 (20 total) | 0.696 |

A significant improvement compared to the previous approaches! Providing the model with examples that are more similar to the input definitely helps it better understand the task and thus improve its performance. But it comes at the cost of much higher inference time and expense.

“Hardest” few-shot selection

Another idea goes like this: let’s show the model examples that it finds the hardest to classify. Why? Because if the model finds certain examples easy to classify, it means it already understands how to handle them, and providing those won’t add much value to the model’s performance. On the other hand, if the model finds some examples hard to classify, it means it doesn’t fully understand their characteristics, and providing them may help the model better understand the differences and boundaries between classes, thus improving its performance.

This method is very prone to outliers and label errors. If the model finds some example hard to classify because it’s mislabeled, providing it to the model not only won’t help but may actually hurt the performance. So it’s important to be very careful with this approach. You should consider removing potential outliers and label errors from the training set before applying this method.

To find the hardest examples, I will simply train a logistic regression model on the training set (on the embeddings of the examples), and then choose the ones with the highest cross-entropy loss:

\[ \mathcal{L}_i = -\log \hat{y}_i \]
where \(\hat{y}_i\) is the predicted probability of the correct class for example \(i\).
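A minimal sketch of the selection step, assuming you already have the proxy classifier’s predicted probabilities as an `(n, n_classes)` array (for example from scikit-learn’s `LogisticRegression(...).fit(X, y).predict_proba(X)`) and integer labels that index its columns, i.e., positions in `classes_`. The helper names are hypothetical.

```python
import numpy as np


def cross_entropy_per_example(probs, labels):
    """Per-example loss -log(p_correct); probs is (n, n_classes), labels are column indices."""
    labels = np.asarray(labels)
    p_correct = probs[np.arange(len(labels)), labels]
    return -np.log(np.clip(p_correct, 1e-12, 1.0))  # clip avoids log(0)


def hardest_shots(probs, labels, k=70):
    """Indices of the k examples with the highest loss, regardless of class."""
    losses = cross_entropy_per_example(probs, labels)
    return np.argsort(-losses)[:k].tolist()


def hardest_shots_stratified(probs, labels, per_class=2):
    """Indices of the per_class highest-loss examples within each class."""
    labels = np.asarray(labels)
    losses = cross_entropy_per_example(probs, labels)
    selected = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        selected.extend(idx[np.argsort(-losses[idx])[:per_class]].tolist())
    return selected
```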

Just as before, I first select the top 70 hardest examples and then compare it to taking the 2 hardest examples from each class.

| Metric | Value |
|---|---|
| Accuracy (70 total) | 0.582 |
| Macro F1 (70 total) | 0.589 |
| Accuracy (stratified) | 0.602 |
| Macro F1 (stratified) | 0.594 |

Metrics are quite bad here: both variants perform below the zero-shot baseline. I didn’t dive deep into the analysis of the results, but I suspect that some of the examples we chose to show to the model are label errors or outliers, which degrades its performance. Still, stratified selection yields slightly better results, which aligns with expectations and previous observations.

Note that I’ve cheated here a bit. Instead of taking the hardest examples for the model I’m using, I use a proxy model to find them. I do that because I really want to rank all the samples in the training set, and acquiring proper probabilities using LLMs is another not-so-trivial problem.

Diversity-based few-shot selection

Here’s another idea: instead of taking the “hardest” examples, let’s take a “diverse” subset of the training set. That way we will “cover” more different examples, and the model will better understand the general characteristics of the classes and the decision boundaries between them.

Sounds great! But how do we select the most “diverse” subset? Or even just a “diverse” subset? How do we measure diversity? And what’s the algorithm to do that in a reasonable time? We definitely don’t want to try every possible combination of 70 out of the 700 training examples:

\[ \binom{700}{70} \approx 3.4 \times 10^{97} \]
That’s not feasible at all.
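To put a number on “every possible combination”: the count of 70-example subsets of the 700-example training split can be computed exactly with Python’s `math.comb`.

```python
import math

# Number of distinct ways to pick 70 few-shot examples out of 700 training examples.
n_subsets = math.comb(700, 70)
print(len(str(n_subsets)))  # number of decimal digits: 98
```

A 98-digit count means that even scoring billions of candidate subsets per second would not make a dent in the search space, which is why the methods below rely on heuristics instead of exhaustive search.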

K-Medoids selection

One option is the k-medoids algorithm. You’ve probably heard about k-means, which is a popular clustering algorithm. This is almost the same, but instead of using some “centroid” to represent each cluster, k-medoids uses actual examples from the dataset as centers.

I won’t dive into its algorithmic details here; fortunately, there are already libraries that implement it. I will use the scikit-learn-extra library, which provides an implementation of the k-medoids algorithm. The API is very simple:

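```python
import numpy as np
from sklearn_extra.cluster import KMedoids


def select_k_medoids(
    embeddings: np.ndarray,
    k: int,
    random_seed: int = 42
) -> list[int]:
    """Return the row indices of k medoids (actual training examples)."""
    kmedoids = KMedoids(
        n_clusters=k,
        metric='cosine',     # cluster by cosine distance between embeddings
        method='pam',        # Partitioning Around Medoids: slower but more accurate
        init='k-medoids++',
        max_iter=500,
        random_state=random_seed
    )
    kmedoids.fit(embeddings)
    # medoid_indices_ are row indices into `embeddings`
    return kmedoids.medoid_indices_.tolist()
```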

This approach gives us the following results:

| Metric | Value |
|---|---|
| Accuracy (70 total) | 0.634 |
| Macro F1 (70 total) | 0.626 |
| Accuracy (stratified) | 0.661 |
| Macro F1 (stratified) | 0.658 |

While medoids of the whole dataset didn’t beat random selection, stratified medoids (i.e., two medoids from each class) performed noticeably better than stratified random selection (0.661 vs 0.645 accuracy). They are still worse than k-nearest neighbors, but the prompt is fixed, so prefix caching can be used and inference is much faster and cheaper.
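The stratified variant simply runs the medoid search inside each class. The sketch below illustrates this without the scikit-learn-extra dependency by using a simplified alternating k-medoids (assign every point to its nearest medoid, then move each medoid to the cluster member with the smallest total distance) with a deterministic farthest-first initialization. This is a swapped-in simplification, not the PAM method used in `select_k_medoids`, and all function names are hypothetical; with the library installed, calling `select_k_medoids` on each class’s embeddings with `k=2` serves the same purpose.

```python
import numpy as np


def cosine_distance_matrix(x):
    """Pairwise cosine distances (1 - cosine similarity) between the rows of x."""
    normed = x / np.linalg.norm(x, axis=1, keepdims=True)
    return 1.0 - normed @ normed.T


def simple_k_medoids(dist, k, max_iter=100):
    """Alternating k-medoids on a precomputed distance matrix (a simplification, not PAM)."""
    # Deterministic init: the most central point, then repeatedly the farthest point.
    medoids = [int(np.argmin(dist.sum(axis=1)))]
    while len(medoids) < k:
        medoids.append(int(np.argmax(dist[:, medoids].min(axis=1))))
    medoids = np.array(medoids)
    for _ in range(max_iter):
        assign = np.argmin(dist[:, medoids], axis=1)  # nearest medoid for every point
        new = medoids.copy()
        for c in range(k):
            members = np.flatnonzero(assign == c)
            if len(members) == 0:
                continue
            # New medoid: the member with the smallest total distance to its cluster.
            within = dist[np.ix_(members, members)].sum(axis=1)
            new[c] = members[np.argmin(within)]
        if np.array_equal(new, medoids):
            break
        medoids = new
    return medoids.tolist()


def stratified_medoids(embeddings, labels, per_class=2):
    """Pick per_class medoids inside each class separately."""
    labels = np.asarray(labels)
    dist = cosine_distance_matrix(embeddings)
    selected = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        local = simple_k_medoids(dist[np.ix_(idx, idx)], min(per_class, len(idx)))
        selected.extend(idx[local].tolist())
    return selected
```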

Determinantal Point Processes

This is where things get a bit more complicated. But I’ll try to keep it as simple as possible (taking into account that I don’t fully understand the math behind it myself).

Suppose we have a set of \(n\) vectors in a \(k\)-dimensional space. We want to select a subset of these vectors that is as “spread out” as possible (meaning the vectors are not too similar to each other). Can we quantify this “spread-out-ness”? We can: by measuring the volume of the parallelotope formed by the vectors. The larger the volume, the more “spread out” the vectors are. To calculate the volume, we can use the Gram matrix of the vectors, \(G = V V^T\) with \(G_{ij} = \left\langle v_i, v_j \right\rangle\), i.e., the matrix of their pairwise inner products. Its determinant equals the square of the \(n\)-dimensional volume of the parallelotope formed by the vectors.

This approach is also used to determine the linear independence of a set of vectors. If the determinant of the Gram matrix is zero, it means that the vectors are linearly dependent.

Let’s see how it works on an example (3 vectors, 3-dimensional space).

Case A: some random vectors.

The results are somewhat expected; as we can see, some vectors are quite similar (have high inner products), and the determinant (the squared volume) is not that high.

Case B: orthogonal vectors.

As we can see, the vectors are completely different (orthogonal), and the determinant is the highest possible for vectors of these lengths (for unit vectors, it equals 1).

Note that this method is somewhat “unstable”: if two vectors are very similar, the determinant will be very close to zero. And if we add a fourth vector to a set of three orthogonal vectors in 3-dimensional space, the determinant drops to 0, because four vectors in three dimensions are always linearly dependent. It is also sensitive to vector scaling, so normalizing the vectors is important to achieve “pure” diversity-based selection.
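A small NumPy sketch of the determinant behavior described above. The vectors are illustrative (they are not the ones from Cases A and B): an orthonormal set, a set where two vectors are nearly parallel, a scaled copy of the orthonormal set, and four vectors in 3-D.

```python
import numpy as np


def gram_det(vectors):
    """Determinant of the Gram matrix V V^T: the squared volume spanned by the rows of V."""
    v = np.asarray(vectors, dtype=float)
    return float(np.linalg.det(v @ v.T))


orthonormal = np.eye(3)                                  # three orthogonal unit vectors
near_dup = [[1.0, 0.0, 0.0], [0.99, 0.14, 0.0], [0.0, 0.0, 1.0]]  # first two almost parallel
scaled = 2 * np.eye(3)                                   # same directions, twice as long
four_in_3d = np.vstack([np.eye(3), [[1.0, 1.0, 1.0]]])   # linearly dependent set

for name, vecs in [("orthonormal", orthonormal), ("near_dup", near_dup),
                   ("scaled", scaled), ("four_in_3d", four_in_3d)]:
    print(name, gram_det(vecs))
```

The orthonormal set gives 1, the nearly parallel pair collapses the volume to about 0.02, doubling every vector multiplies the determinant by \(2^{2 \cdot 3} = 64\), and the four vectors in 3-D give (numerically) zero.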

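Now on to DPPs. Suppose we have a symmetric positive semidefinite matrix \(L\) of size \(n \times n\) (one row and column per item). A DPP (in its so-called L-ensemble form) defines a probability distribution over subsets of the items, where the probability of selecting a subset \(S\) is:

\[ P(S) = \frac{\det(L_S)}{\det(L + I)} \]

where \(L_S\) is the submatrix of \(L\) indexed by the items in \(S\), and \(I\) is the \(n \times n\) identity matrix (the denominator normalizes the probabilities over all \(2^n\) subsets). The higher the determinant of the submatrix, the higher the probability of selecting that subset. When the subset size is fixed to \(k\) (a k-DPP), \(P(S) \propto \det(L_S)\) over subsets with \(|S| = k\).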

In our case \(L = E E^T\), where \(E\) is the matrix whose rows are the embeddings of the examples in the training set, so \(L_{ij} = \left\langle e_i, e_j \right\rangle\), where \(e_i\) is the embedding of example \(i\):

- Diagonal entries \(L_{ii} = {\Vert e_i \Vert}^2\) represent the “quality” of each example (equal to \(1\) if we normalize the embeddings).

- Off-diagonal entries \(L_{ij} = \left\langle e_i, e_j \right\rangle\) represent the similarity between examples \(i\) and \(j\).

So, the DPP will assign higher probabilities to subsets of more “diverse” examples (with lower similarities):

- Vectors that are spread apart (diverse) → large volume → high probability;

- Near-duplicate vectors → near-zero volume → near-zero probability.
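For a handful of items, the L-ensemble probability \(P(S) = \det(L_S) / \det(L + I)\) can be checked by brute force. The sketch below (a hypothetical helper with illustrative vectors) enumerates every subset; the probabilities sum to 1 because \(\sum_S \det(L_S) = \det(L + I)\), and the pair of near-duplicate items gets a far lower probability than a pair of orthogonal ones.

```python
import itertools

import numpy as np


def dpp_subset_probabilities(L):
    """Exact L-ensemble probabilities P(S) = det(L_S) / det(L + I) for every subset S.

    Enumerates all 2^n subsets, so it is only meant for tiny n.
    """
    n = L.shape[0]
    norm = np.linalg.det(L + np.eye(n))
    probs = {(): 1.0 / norm}  # the determinant of an empty submatrix is 1 by convention
    for size in range(1, n + 1):
        for S in itertools.combinations(range(n), size):
            probs[S] = np.linalg.det(L[np.ix_(S, S)]) / norm
    return probs


# Items 0 and 1 are near-duplicates; item 2 is orthogonal to both.
E = np.array([[1.0, 0.0, 0.0], [0.995, 0.0998, 0.0], [0.0, 0.0, 1.0]])
E /= np.linalg.norm(E, axis=1, keepdims=True)  # unit "quality" for every item
L = E @ E.T
probs = dpp_subset_probabilities(L)
```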

Thankfully (again), there are already libraries that implement DPP sampling. I will use the DPPy library, which provides an implementation for DPP sampling (even restricting it to a fixed number of items). The API is very simple as well:

```python
import numpy as np
from dppy.finite_dpps import FiniteDPP

k = 70  # number of examples to select
random_seed = 42

embedder = ...  # some embedding model
X = [...]  # list of examples in the training set

all_embeddings = np.array(embedder.get(X))
# Normalize so that every item has the same "quality" (L_ii = 1)
normed = all_embeddings / np.linalg.norm(all_embeddings, axis=1, keepdims=True)

# Likelihood kernel L = E E^T (pairwise cosine similarities)
dpp = FiniteDPP('likelihood', L=(normed @ normed.T))
# Draw exactly k items; the sample is also stored in dpp.list_of_samples
dpp.sample_exact_k_dpp(size=k, random_state=random_seed)
selected_indices = dpp.list_of_samples[0]
```
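`sample_exact_k_dpp` draws a random subset, so different seeds give different prompts. If a deterministic choice is preferred, a common alternative is greedy MAP inference: start from an empty set and repeatedly add the item that increases \(\log\det(L_S)\) the most. This is a different technique from the exact sampling used above and only approximates the most likely subset. The naive sketch below recomputes a log-determinant for every candidate, which is acceptable for a few hundred items; `greedy_dpp_map` is a hypothetical name.

```python
import numpy as np


def greedy_dpp_map(L, k):
    """Greedily pick up to k items that (approximately) maximize det(L_S)."""
    n = L.shape[0]
    selected = []
    for _ in range(k):
        best_item, best_logdet = None, -np.inf
        for i in range(n):
            if i in selected:
                continue
            S = selected + [i]
            sign, logdet = np.linalg.slogdet(L[np.ix_(S, S)])
            # L is PSD, so sign <= 0 can only come from a (numerically) singular submatrix.
            if sign > 0 and logdet > best_logdet:
                best_item, best_logdet = i, logdet
        if best_item is None:  # no remaining item adds any volume
            break
        selected.append(best_item)
    return selected
```

With the normalized embeddings from the snippet above, `greedy_dpp_map(normed @ normed.T, 70)` would return 70 indices in the order they were added.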

With this approach we get the following results:

| Metric | Value |
|---|---|
| Accuracy (70 total) | 0.657 |
| Macro F1 (70 total) | 0.655 |
| Accuracy (stratified) | 0.627 |
| Macro F1 (stratified) | 0.621 |

First, general DPP selection performs better than general k-medoids selection, and is even comparable to stratified k-medoids selection. Second, stratified DPP selection performs worse than general DPP selection, which is quite surprising. I think we face the same problem as with the “hardest” selection — there are some outliers or label errors in the dataset, and by selecting the most “dissimilar” examples we end up picking these outliers, which degrades the model’s performance.

Another important note here is that with both k-medoids and DPP selection we select some “diverse” examples, and not necessarily the “most informative” ones or the ones closest to the decision boundaries.

Importance-based few-shot selection

There is an entire family of methods that belong to the category of Most Influential Subset Selection (MISS). The idea is to select “a subset of training samples with the greatest collective influence.” I won’t dive into the methods that belong specifically to this category, but I will try to implement a simple version of it - an importance-based few-shot selection.

The idea is quite simple: let’s define the “importance” of each example in the training set, and then select the \(k\) examples with the highest importance. The importance of an example can be defined in different ways, but I’ll use a weighted sum of per-example features:

\[ \text{importance}_i = p^\top f_i, \qquad f_i = \left(\text{entropy}_i,\ \text{consistency}_i,\ \text{density}_i\right) \]

where \(p\) is a vector of weights that we will optimize on the validation set, and \(f_i\) is the vector of features for example \(i\). Entropy is calculated the same way as in the “hardest” selection. Consistency is the fraction of the \(k_c\) nearest neighbors of example \(i\) that belong to the same class as \(i\). Density is the inverse of the average distance from example \(i\) to its \(k_d\) nearest neighbors.

- The higher the entropy, the more “uncertain” the example is, and thus the more “important” it is to provide to the model.

- The higher the consistency, the more “representative” the example is for its class, and thus the more “important” it is to provide to the model.

- The lower the density, the more “unusual” the example is, and thus the more “important” it is to provide to the model.
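A sketch of the three features and the weighted score, under a few assumptions: embeddings and integer labels as NumPy arrays, proxy-classifier probabilities as in the “hardest” selection, cosine distance for the neighborhoods, and “entropy” computed as the per-example cross-entropy (as that section does). The function names, neighborhood sizes, and any weights you pass in are hypothetical; the article tunes the weights on the validation split, and a negative density weight is what expresses “lower density, more important”.

```python
import numpy as np


def importance_scores(embeddings, labels, probs, weights, k_c=10, k_d=10):
    """Importance = weights . [entropy, consistency, density] for every example."""
    labels = np.asarray(labels)
    n = len(labels)
    k_c, k_d = min(k_c, n - 1), min(k_d, n - 1)  # a vicinity can't exceed the other n - 1 items

    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    dist = 1.0 - normed @ normed.T    # pairwise cosine distances
    np.fill_diagonal(dist, np.inf)    # an example is not its own neighbor
    order = np.argsort(dist, axis=1)  # neighbor indices, nearest first

    # "Entropy": per-example cross-entropy of the proxy classifier.
    p_correct = probs[np.arange(n), labels]
    entropy = -np.log(np.clip(p_correct, 1e-12, 1.0))

    # Consistency: share of the k_c nearest neighbors that carry the same label.
    consistency = (labels[order[:, :k_c]] == labels[:, None]).mean(axis=1)

    # Density: inverse of the mean distance to the k_d nearest neighbors.
    nearest = np.take_along_axis(dist, order[:, :k_d], axis=1)
    density = 1.0 / np.clip(nearest.mean(axis=1), 1e-12, None)

    features = np.stack([entropy, consistency, density], axis=1)
    return features @ np.asarray(weights, dtype=float)


def top_k_important(scores, k=70):
    """Indices of the k examples with the highest importance."""
    return np.argsort(-scores)[:k].tolist()
```

Since the density feature is unbounded while consistency lies in \([0, 1]\), the tuned weights also absorb the difference in scale; standardizing the features first is an option, although the article does not say whether that was done.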

This method is prone to the same problems as the “hardest” and “diverse” selection — it can select outliers and label errors, which can degrade the model’s performance. So it’s important to either manually check the selected examples or use some dataset cleaning techniques.

After optimizing the weights on the validation set, I ended up with the following values:

And the results on the test set are the following:

| Metric | Value |
|---|---|
| Accuracy (70 total) | 0.663 |
| Macro F1 (70 total) | 0.657 |
| Accuracy (stratified) | 0.644 |
| Macro F1 (stratified) | 0.631 |

Conclusion

Let’s first summarize the results of all the methods in one table:

| Method | Accuracy (non-stratified) | Macro F1 (non-stratified) | Accuracy (stratified) | Macro F1 (stratified) |
|---|---|---|---|---|
| Zero-shot prompting | 0.631 | 0.632 | n/a | n/a |
| Random selection | 0.636 | 0.624 | 0.645 | 0.637 |
| K-nearest neighbors (20 examples) | 0.707 | 0.696 | n/a | n/a |
| “Hardest” selection | 0.582 | 0.589 | 0.602 | 0.594 |
| K-medoids selection | 0.634 | 0.626 | 0.661 | 0.658 |
| DPP selection | 0.657 | 0.655 | 0.627 | 0.621 |
| Importance-based selection | 0.663 | 0.657 | 0.644 | 0.631 |

Overall, we can observe the following trends:

- Well-chosen few-shot examples improve the model’s performance compared to zero-shot prompting, while poorly chosen ones (like the “hardest” examples here) can make it worse;

- The way we select few-shot examples does matter;

- Random selection is a good baseline but is not the best approach;

- Stratified selection (ensuring that we select examples from all classes) helped random, “hardest”, and k-medoids selection, but hurt DPP and importance-based selection, so it is not a universal win;

- Some methods may be prone to selecting outliers and label errors;

- K-nearest neighbors selection yields the best performance, but it is also the most expensive in terms of inference time and cost;

- K-medoids and DPP selection provide a good balance between performance and inference cost.

The method you choose will depend not only on the performance you want to achieve but also on the inference time and cost constraints you have. If you want to get the best possible results and don’t care about the inference cost, k-nearest neighbors selection may be a good choice. If you want to achieve good results with a fixed prompt and lower inference cost, diversity methods may come in handy. However, all of this should be taken with caution, as the results may vary depending on the dataset, the model, and the specific implementation details.

You can find the code for this article on my GitHub.